Find3D: Localizing Semantic Concepts in the 3D Space

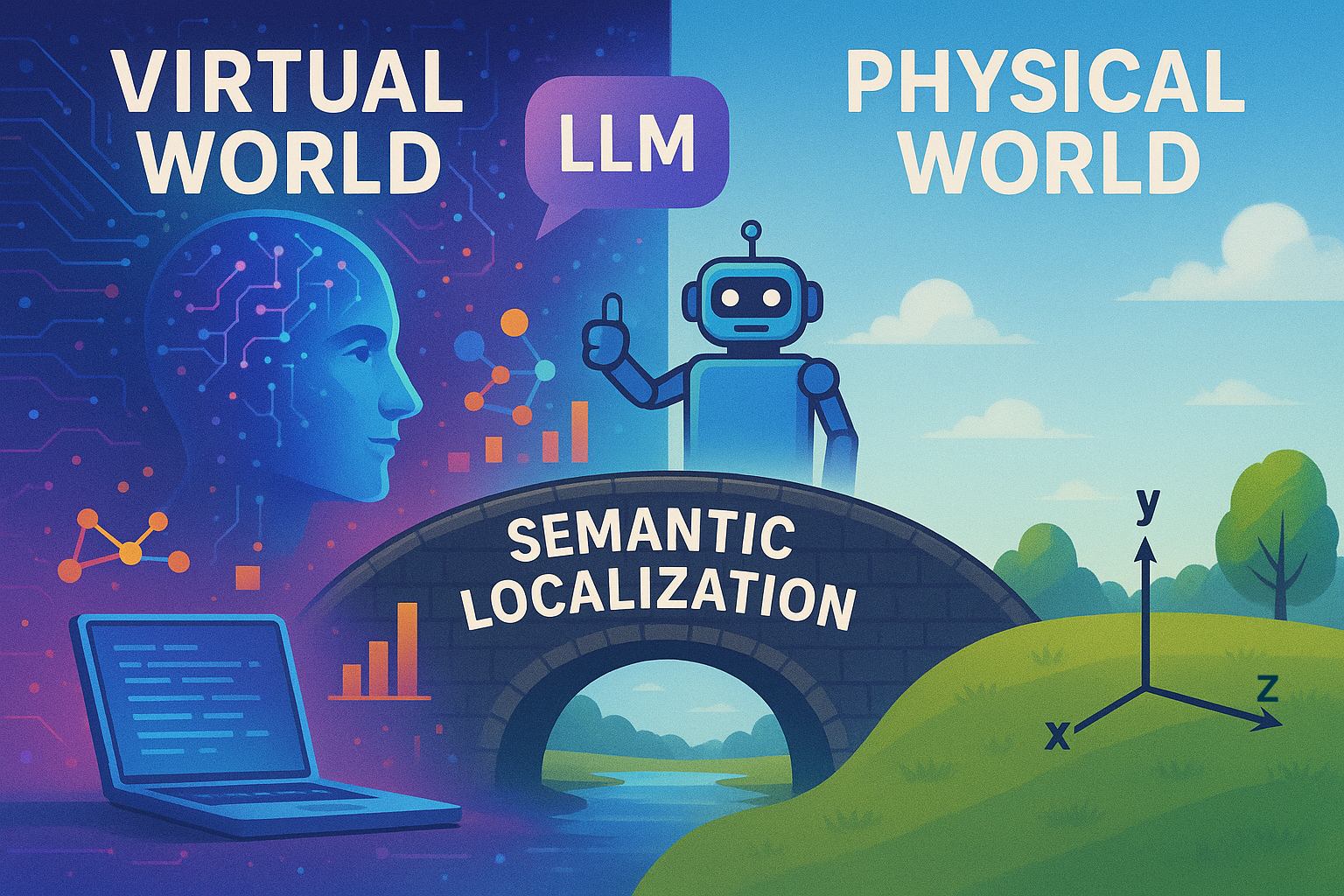

Intelligence in the virtual world can live in the form of language, yet the physical world is different: it is described by physical coordinates x, y, and z. Building a bridge between the two means, in the simplest sense, being able to pinpoint which (x, y, z) locations a semantic concept corresponds to. I believe that localizing semantic concepts in space is the first step towards enabling physical intelligence. This is what Find3D sets out to solve.

Constraining the solution space - key design decisions

Of course, localization cannot be solved in a single project. We make a few design decisions to focus on the most critical setting:

- Work on the point cloud representation: point clouds are readily available from depth sensors in robotic applications, and VGGT-type methods readily produce point maps.

- Focus on object parts: manipulation-type tasks require understanding below the object level, e.g. lifting the handle. Parts are closely related to affordance, yet visual understanding at finer-than-object granularity has been much less studied than object-level understanding.

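- Semantic localization should be 3D-native rather than lifted from 2D. Why? Some parts are hard to find in any 2D view, such as a light bulb occluded by its lampshade from almost every viewing direction, so reasoning directly on the 3D points avoids relying on what individual views happen to see.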

- We embrace the bitter lesson: we want a scalable data strategy and a scalable architecture that can keep benefiting from growing data.

The data strategy

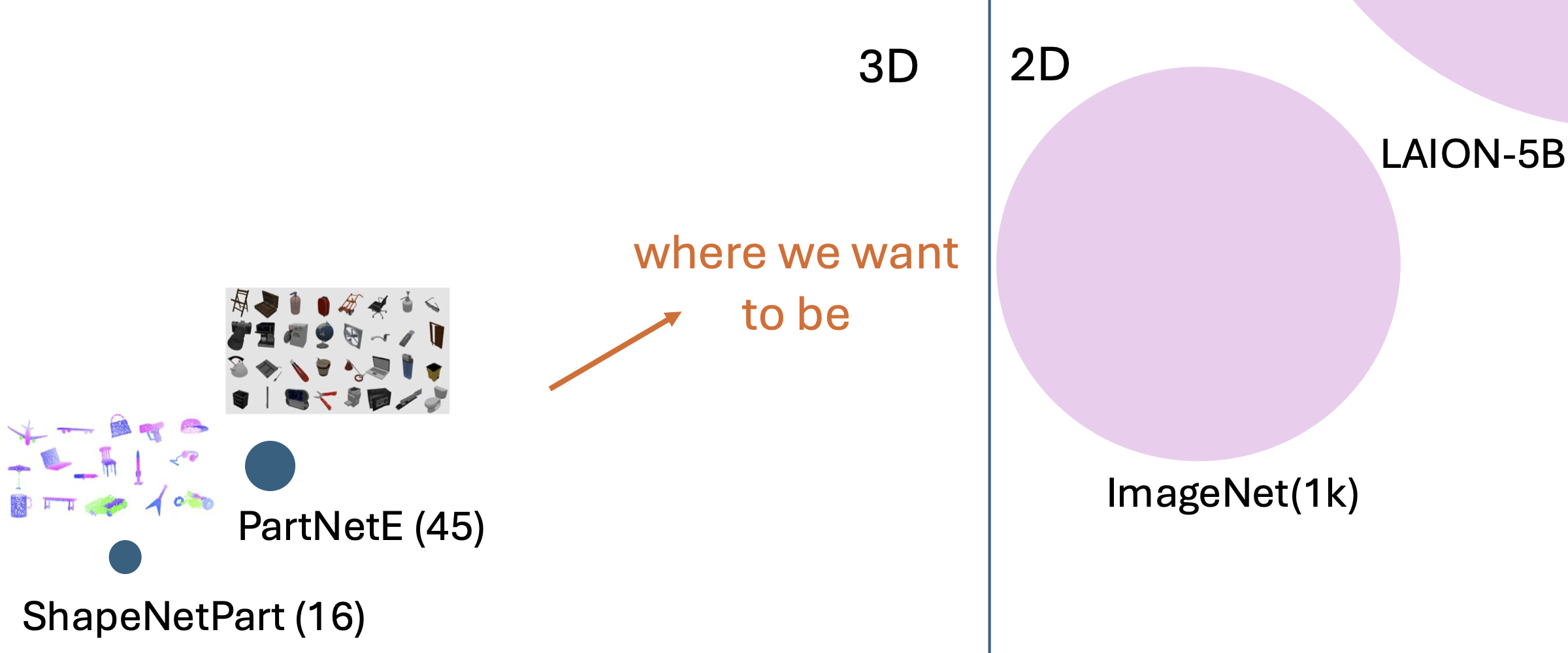

Now we know that we want a 3D-native model for localizing concepts at the part level, and one that works generally (rather than on a toy set of objects). The question is how. While small benchmarks and datasets for part segmentation such as ShapeNetPart and PartNetE exist, they are very constrained in their domains, and we do not have annotated data for general objects. As shown in the figure below, the data volume in 3D is in no way comparable with 2D, not to mention language.

While 3D data is scarce, we do have abundant data sourced from the Internet, which has led to incredibly powerful VLMs with strong visual understanding in 2D. I realized that we can turn the localization problem into a recognition problem by building a data engine.

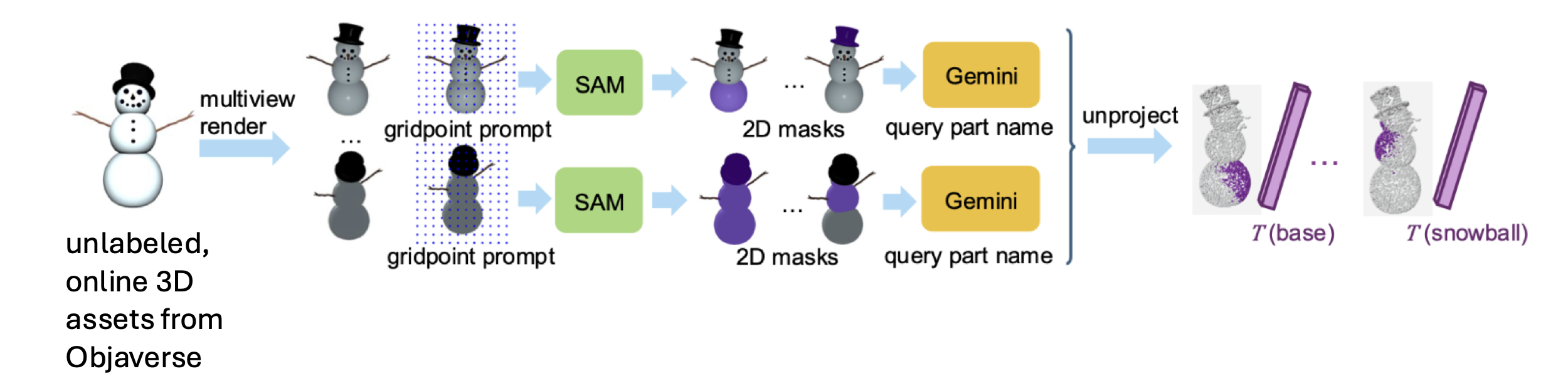

The data engine starts by rendering unlabeled online 3D assets from Objaverse and obtaining masks by grid-prompting SAM. We want to highlight that existing 2D “part finding” models such as Grounded-SAM usually miss parts; grid-prompting captures a more comprehensive set of parts, especially ones that are small or seen from less common viewpoints. The goal here is not complete coverage of each individual object, but rather coverage of a wide range of objects and parts across the full dataset. With proper filtering, we obtain around 200 masks per object (from 10 views). In the next section, I will discuss how to leverage such powerful (but noisy) data.
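Grid prompting can be sketched as follows. This is a minimal illustration, not the Find3D code: `grid_prompts` lays out a regular grid of point prompts over a rendered view (the same idea behind SAM's automatic mask generator), and the hypothetical `filter_masks` stands in for the "proper filtering" step, assuming simple area and duplicate (IoU) criteria with illustrative thresholds. The SAM call itself is omitted; `masks` is assumed to be a list of boolean arrays returned for one view.

```python
import numpy as np

def grid_prompts(height, width, points_per_side=32):
    """Return a (points_per_side**2, 2) array of (x, y) pixel prompts on a regular grid."""
    # Offset by half a cell so prompts sit at cell centres rather than on the border.
    xs = (np.arange(points_per_side) + 0.5) * (width / points_per_side)
    ys = (np.arange(points_per_side) + 0.5) * (height / points_per_side)
    gx, gy = np.meshgrid(xs, ys)
    return np.stack([gx.ravel(), gy.ravel()], axis=1)

def mask_iou(a, b):
    """Intersection-over-union of two boolean masks."""
    inter = np.logical_and(a, b).sum()
    union = np.logical_or(a, b).sum()
    return inter / union if union > 0 else 0.0

def filter_masks(masks, min_area=100, max_area_frac=0.9, iou_thresh=0.8):
    """Hypothetical filter: drop tiny or near-full-image masks and near-duplicates.

    Masks are visited largest first, so among near-duplicates the larger one is kept.
    """
    kept = []
    for m in sorted(masks, key=lambda m: m.sum(), reverse=True):
        area = m.sum()
        if area < min_area or area > max_area_frac * m.size:
            continue
        if all(mask_iou(m, k) < iou_thresh for k in kept):
            kept.append(m)
    return kept
```

For example, `grid_prompts(512, 512, points_per_side=4)` returns 16 prompts, the first at pixel (64, 64). A dense grid deliberately over-generates masks; the filter then trades a little recall for fewer redundant or degenerate labels.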

By highlighting parts with colored masks, we can pass these overlaid renderings to VLMs such as Gemini to name the highlighted part, and use a CLIP-like embedding of that name as the ground-truth label for all corresponding points. The masks are then back-projected to 3D to serve as point-level annotations. Because we use language as the medium, we naturally obtain labels that are diverse in two senses:

- The language descriptions are diverse, e.g. the body of a telescope is called both a “telescope tube” and the “body of a telescope”.

- We get multiple levels of granularity rather than adhering to one set of manually defined criteria, e.g. we get the “base” of a milkshake as well as the coarser-grained “milkshake glass”.
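One common way to implement the back-projection, assuming the point cloud is sampled from the asset's surface and each view is rendered with a known pinhole camera, is to project every point into the view and keep the points that land inside the mask and pass a depth test. The function name, the world-to-camera convention, and the `depth_tol` value are assumptions for illustration; every point marked `True` would then receive the embedding of the VLM-provided part name as its label.

```python
import numpy as np

def points_in_mask(points, K, R, t, depth, mask, depth_tol=0.01):
    """Mark which 3D points fall inside a 2D mask and are visible in that view.

    points: (N, 3) world coordinates
    K: (3, 3) pinhole intrinsics; R (3, 3), t (3,): world-to-camera transform
    depth: (H, W) rendered depth map (camera z); mask: (H, W) boolean part mask
    """
    cam = points @ R.T + t                      # world -> camera coordinates
    z = cam[:, 2]
    in_front = z > 1e-6
    safe_z = np.where(in_front, z, 1.0)         # avoid dividing by zero or negative depth
    uvw = cam @ K.T                             # homogeneous pixel coordinates
    u = np.floor(uvw[:, 0] / safe_z).astype(int)  # column index
    v = np.floor(uvw[:, 1] / safe_z).astype(int)  # row index
    H, W = mask.shape
    in_image = in_front & (u >= 0) & (u < W) & (v >= 0) & (v < H)
    hit = np.zeros(len(points), dtype=bool)
    idx = np.nonzero(in_image)[0]
    # Depth test: the point must lie on the surface the camera actually sees;
    # otherwise it is occluded in this view and must not inherit the label.
    visible = np.abs(depth[v[idx], u[idx]] - z[idx]) < depth_tol
    hit[idx] = visible & mask[v[idx], u[idx]]
    return hit
```

The depth test matters because a mask only describes the surface facing the camera: without it, points on the far side of the object that project into the same pixels would wrongly receive the part's name.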

Training and scaling the model

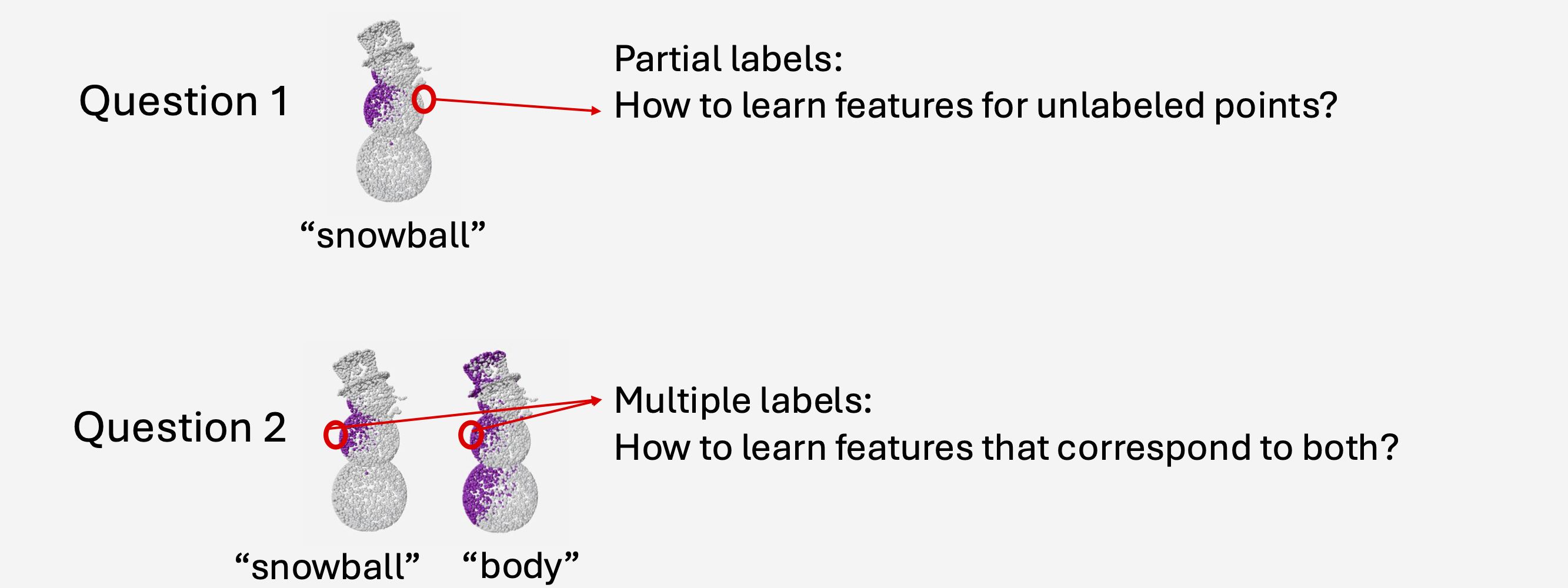

The data from our data engine is very diverse, yet also noisy. Two main challenges in training are seen in the image below:

- However dense we try to make our labels, some points cannot be covered by our viewpoints or selected masks. We need a way to provide supervision for those points as well.

- Because labels can come from different granularities or use different descriptions, a point may fall under several labels (from the same view or from overlapping views). We need to reconcile these.
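Both challenges become concrete once every label has been back-projected. Assuming the labels for one object are stacked into an (M, N) boolean matrix, one row per label and one column per point (a hypothetical layout, not necessarily how Find3D stores them), the column sums show which points have no supervision at all and which have several competing labels:

```python
import numpy as np

def label_stats(point_masks):
    """Summarize supervision per point for one object.

    point_masks: (M, N) boolean array; row m marks the points covered by label m
    (after back-projection, possibly from different views).
    Returns the fraction of unlabeled points and the per-point label count.
    """
    counts = point_masks.sum(axis=0)            # number of labels covering each point
    unlabeled_frac = float(np.mean(counts == 0))
    return unlabeled_frac, counts
```

With two labels over four points, e.g. rows `[1, 1, 0, 0]` and `[0, 1, 1, 0]`, one point in four is unlabeled (the first challenge) and the second point carries two labels (the second challenge).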

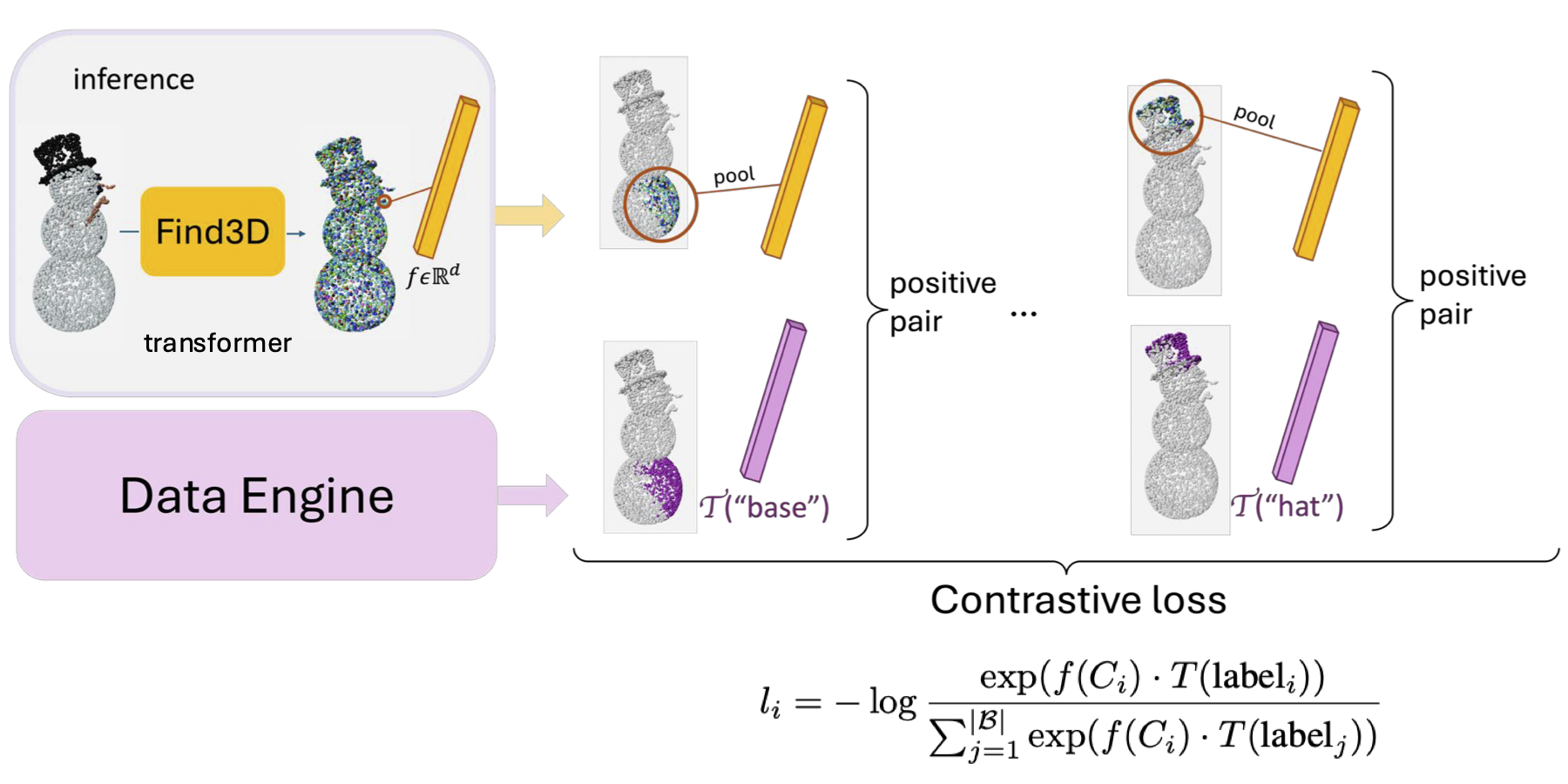

In computer vision, we have a long history of dealing with large-scale, noisy data. We follow a contrastive approach as shown below:

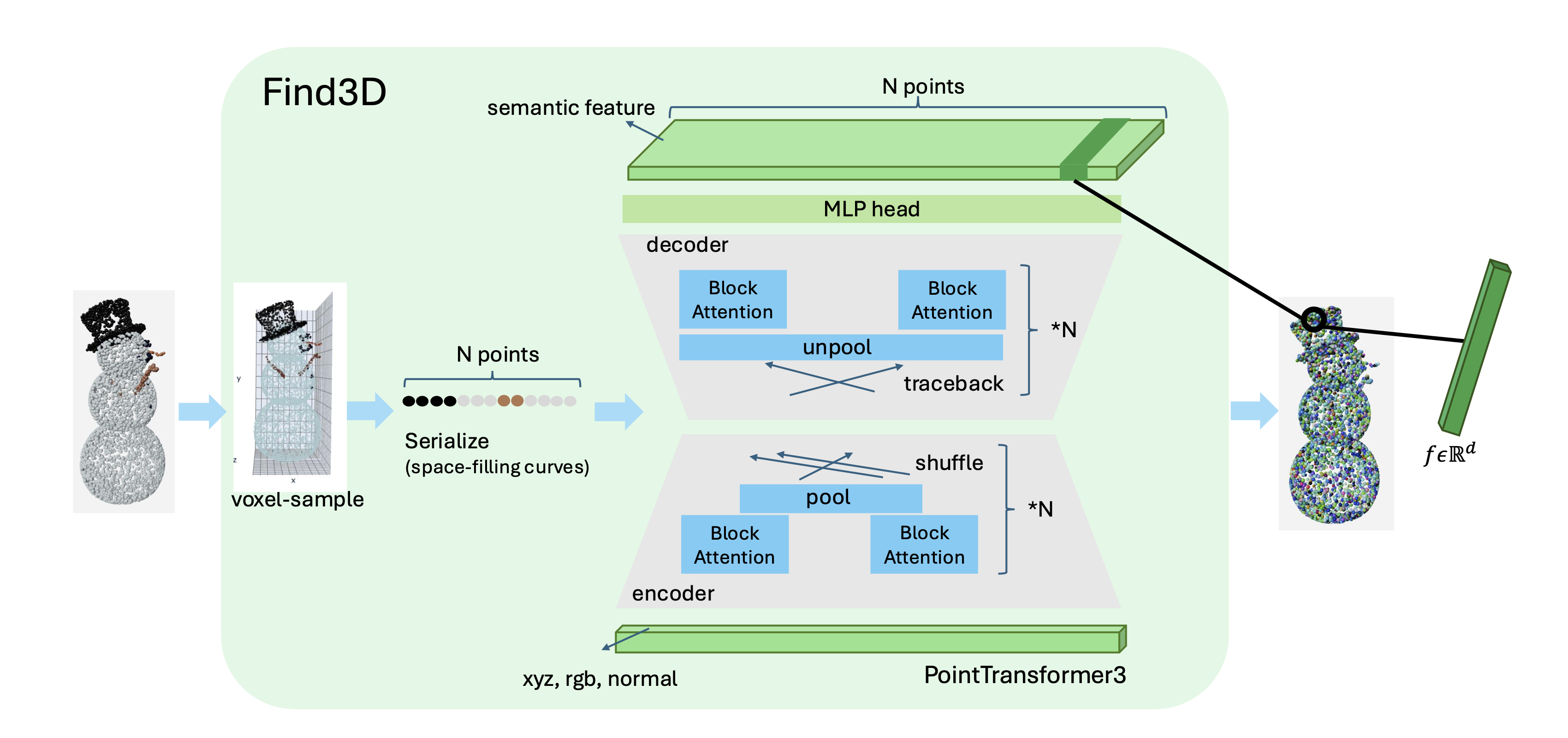

For each (asset, label) pair from the data engine, we form a positive pair between the pooled features of the label’s back-projected points and the label’s text feature. Aggregating over objects gives around 3k positive pairs per batch. Architecturally, we adopt the Point Transformer V3 (PT3) architecture so that the model can learn geometric priors over points, as shown below.
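A minimal sketch of this objective, assuming a CLIP-style symmetric InfoNCE loss over the batch (the exact loss form, temperature, and pooling used in Find3D may differ): per-point features are mean-pooled over each label's back-projected points, and each pooled feature is contrasted against every label embedding in the batch. In practice the point features would come from the PT3 backbone and the label embeddings from the text encoder; here both are plain NumPy arrays, and all function names are hypothetical.

```python
import numpy as np

def l2_normalize(x, eps=1e-8):
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def pool_features(point_feats, point_masks):
    """Mean-pool per-point features over each label's back-projected points.

    point_feats: (N, D); point_masks: (M, N) boolean. Returns (M, D).
    Labels that cover no points pool to zeros.
    """
    w = point_masks.astype(point_feats.dtype)
    return (w @ point_feats) / np.maximum(w.sum(axis=1, keepdims=True), 1.0)

def log_softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)     # numerical stability
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def contrastive_loss(pooled, text, temperature=0.07):
    """Symmetric InfoNCE: row i of `pooled` and row i of `text` form the positive pair;
    every other row in the batch acts as a negative."""
    logits = l2_normalize(pooled) @ l2_normalize(text).T / temperature  # (M, M)
    diag = np.arange(len(logits))
    loss_p2t = -log_softmax(logits, axis=1)[diag, diag].mean()  # points -> labels
    loss_t2p = -log_softmax(logits, axis=0)[diag, diag].mean()  # labels -> points
    return 0.5 * (loss_p2t + loss_t2p)
```

One caveat of this formulation: if the same part name appears twice in a batch (e.g. two different mugs both labeled "handle"), the duplicate acts as a false negative. Deduplicating labels or masking identical text embeddings in the logits are common remedies.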

With this formulation, we can address the two challenges mentioned above:

- For unannotated points, the geometric priors learned by our model make them naturally share similar features with annotated points that have similar geometric properties (e.g. annotated points on the same sphere).

- For points with multiple labels, their features are pulled towards all of their supervision labels, so at inference time we can query at different granularities or with language descriptions that emphasize different aspects such as function or material.
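At inference time, querying reduces to comparing per-point features with text embeddings. A minimal sketch, assuming both live in the same embedding space as in the contrastive setup: cosine similarity against a single query gives a heatmap over points, and the argmax over a set of queries gives a segmentation among those queries. `query_points` is a hypothetical helper.

```python
import numpy as np

def query_points(point_feats, query_embs, eps=1e-8):
    """Score every point against one or more text queries by cosine similarity.

    point_feats: (N, D) per-point features; query_embs: (Q, D) query text embeddings.
    Returns the (N, Q) similarity matrix and, per point, the index of the best query.
    """
    p = point_feats / (np.linalg.norm(point_feats, axis=1, keepdims=True) + eps)
    q = query_embs / (np.linalg.norm(query_embs, axis=1, keepdims=True) + eps)
    sims = p @ q.T                  # column j is a heatmap for query j
    return sims, sims.argmax(axis=1)
```

Because a point's feature is pulled toward several labels during training, the same point should score highly for both a fine query such as "base" and a coarse one such as "milkshake glass". Querying them separately, rather than forcing an argmax between them, preserves that multi-granularity behavior.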

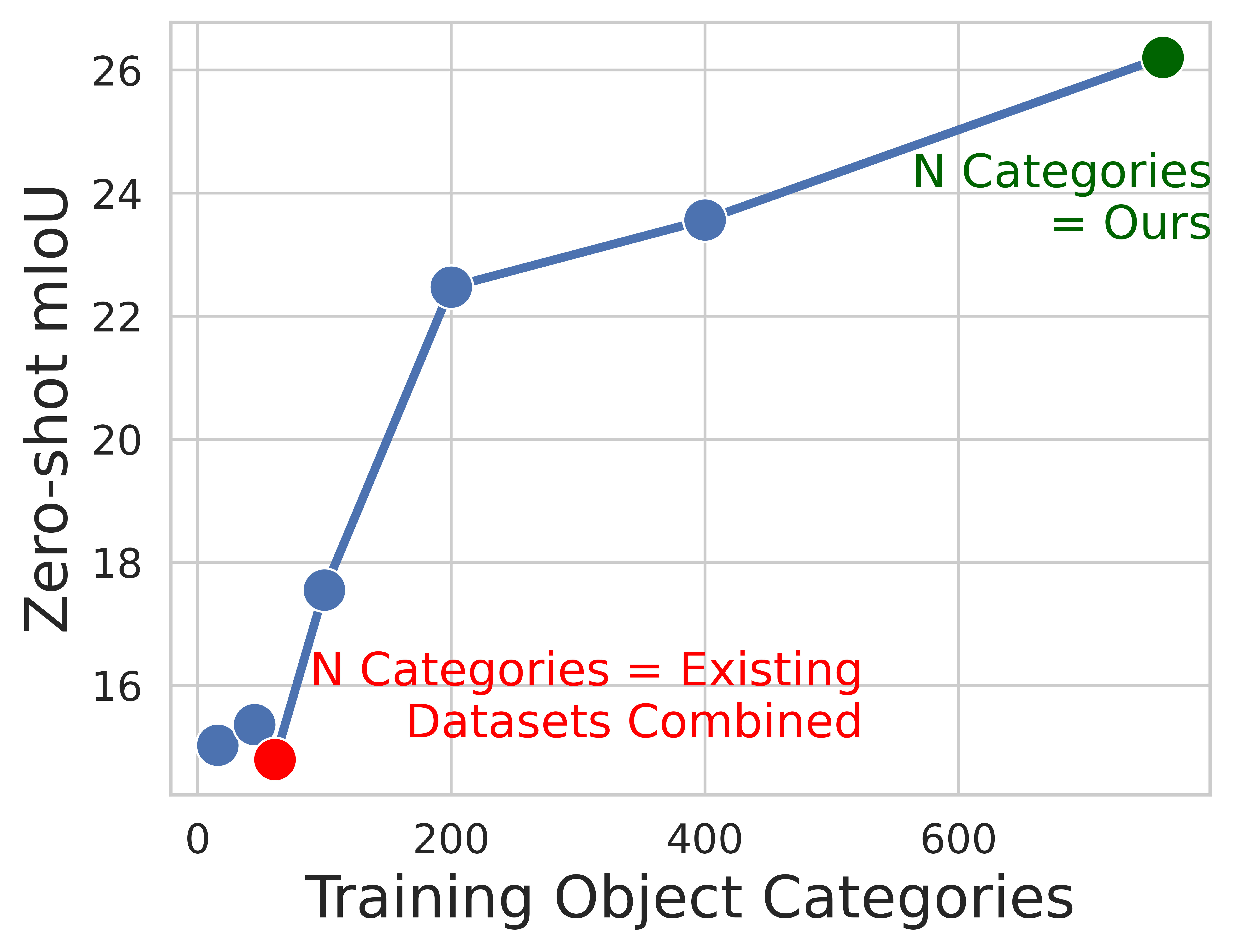

The contrastive objective helps with learning from our large-scale, diverse data. We also observe good scaling behavior as we increase the number of object categories in the training data, as shown in the figure below.

Achieving generalization

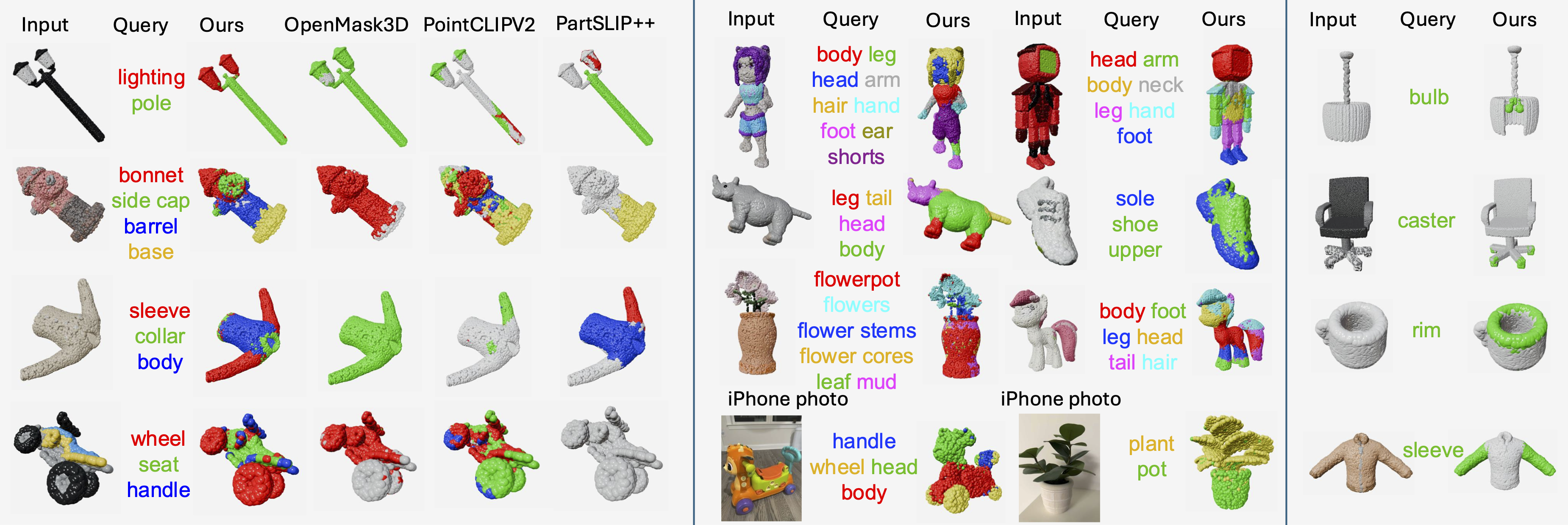

Find3D can locate parts on diverse objects, and can even localize challenging parts (rightmost column) that are difficult to locate in 2D: the light bulb inside the lampshade (occluded from almost every viewing direction), the rim of a mug, or the sleeve of a monochromatic shirt (no edge delineation).

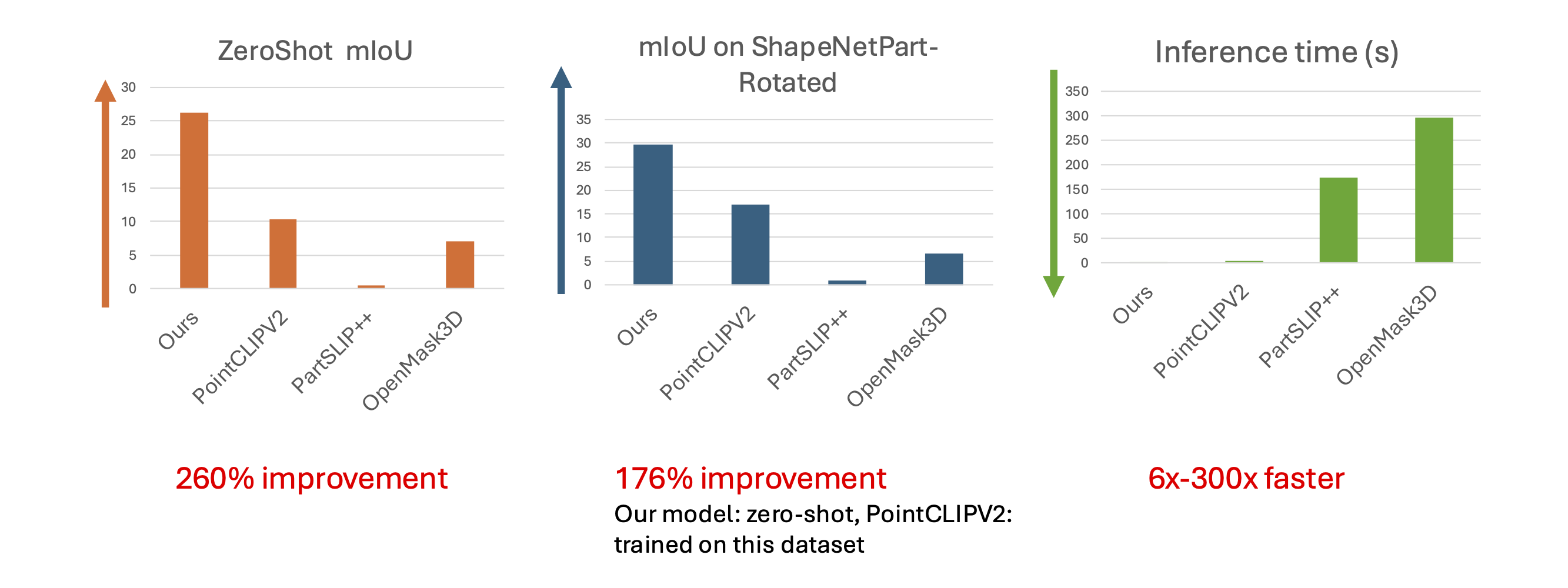

Even on the smaller datasets that other methods are trained on, Find3D achieves better results zero-shot, while also being much faster.

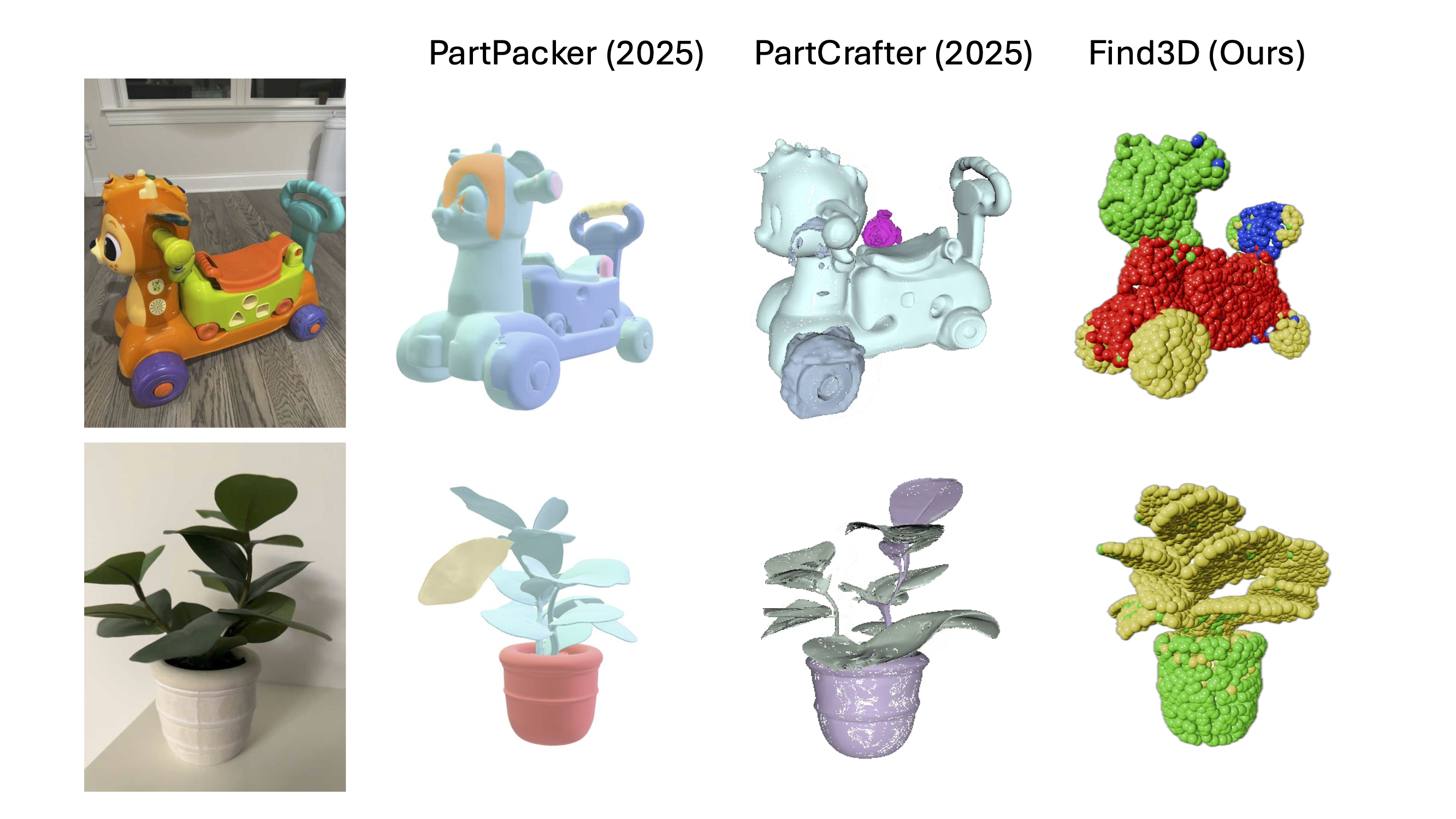

More interestingly, we are able to generalize to the real world in ways that more recent decomposed generation methods cannot, as shown below.

What’s next?

Going back to the initial motivation, we want to solve semantic part localization because it is the first step towards bridging the intelligence that currently lives in language to the physical space delineated by spatial coordinates x, y, z. A natural next step is to make it truly useful in end-to-end robotic tasks, and to tackle the more general problem of the interface between robotics and reasoning. Stay tuned!
